Don Berkich

Professionally

Personally

Contact
Email:
Phone: (361) 825-3976 (office)
Office:

Don Berkich, Ph.D.

Summer 2025 Courses:
PHIL 2306.001: Introduction to Ethics (MTWR 10:00-11:55, CS-114)

Summer 2025 Student Hours:
MTWR 12:00-1:30 and by appointment. Please feel free to email or text me (361-944-2756) to schedule a meeting.

Publications:

"The College of Unconventional Applied Arts and Sciences: A Prospectus" (pdf preprint)
In The Danish Yearbook of Philosophy Special Issue: Revisiting the Idea of the University. Søren S.E. Bengtsen and Asger Sørensen, eds., Vol. 52 No. 1 (November 2019) pp. 139-151.
The plodding rate of change within higher education makes it ill-suited to anticipate challenges rapidly looming in the government and corporate sectors. This prospectus outlines those challenges and describes a bold solution. If implemented, it would signal a less hidebound, more adroit institution of higher education, one better able to serve students, business, and society while fostering a new future for higher education.

"Introduction" (pdf preprint)
In “On the Cognitive, Ethical, and Scientific Dimensions of Artificial Intelligence: Themes from IACAP 2016”, Philosophical Studies Series, Vol. 134, Don Berkich and Matteo D’Alfonso eds. (Springer Nature Switzerland: 2019) pp. 1-23.
The intersection between philosophy and computing is curiously expansive, as the articles in this volume amply demonstrate. New vistas of inquiry are being discovered and explored in ways that neither field alone, philosophy nor computer science, would suggest. An exemplar of the fruitfulness of interdisciplinary work can be found in the International Association for Computing and Philosophy (IACAP). At the association’s inception in the mid-1980s, Computing and Philosophy conferences were almost wholly devoted to discussing the pedagogical uses of the freshly deployed desktop computer.
Much of that work now seems quaint in light of the many ways in which computers and networks have since become integral to the modern university’s educational mission. Yet it is interesting to note that philosophers were ‘early adopters’, front and center in discussions of how best to adapt the computer to teaching. This pedagogical focus would persist through the early 1990s. However, the long history tying philosophical, mathematical, and computational investigations together (the work of Hobbes and Leibniz looms particularly large in this regard) would soon draw philosophical and mathematical logicians, computer scientists, neuroscientists, ethicists, roboticists, psychologists, information theorists, and philosophers of mind into discussions by turns historical, foundational, and applied. In the decades that followed, individual threads of inquiry and the discussions they prompted have been woven into the fabric of important research agendas, well furthered by papers presented at the 2016 meeting of the International Association for Computing and Philosophy at the University of Ferrara, Ferrara, Italy, June 14-17. Hosted by Professors Marcello D’Agostino and (my co-editor) Matteo D’Alfonso, IACAP 2016 was graciously sponsored by the University of Ferrara, the Department of Economics and Management, and the Dipartimento di Studi Umanistici. The 21 contributions to this volume neatly represent a cross section of 40 papers, 4 keynote addresses, and 8 symposia as they cut across fully six distinct research agendas. Now, I take it that an editor’s duty is not merely to describe the ways in which the contributions further the research agendas, but to help frame and better set those agendas for readers and researchers alike. Briefly, then, this volume begins with foundational studies in (1) Computation and Information, (2) Logic, and (3) Epistemology and Science.
Research into computational aspects of (4) Cognition and Mind leads neatly into (5) Moral Dimensions of Human-Machine Interaction, followed finally by broader social and political investigations into (6) Trust, Privacy, and Justice.

"Machine Intentions" (pdf preprint)
Featured article in The APA Newsletter on Philosophy and Computers, Vol. 18 No. 1 (Fall 2018) pp. 3-10.
Debate between philosophical skeptics and researchers in artificial intelligence has been rich, penetrating, and fruitful, if frustrating at times. The two sides rarely speak past one another: the skeptics set conceptual markers drawn from the philosophy of mind to caution against reading too much into the successes of AI. Yet claims on both sides are prone to excess. For instance, philosophical skeptics of developments in robotics have found puzzling the roboticists’ rarely contested assumption that a robot can act of its own accord: Why should we think that a mere artifact, no matter how complicated, could ever have the capacity to act of its own accord given that its purpose and function are completely determined by its design specification? The skeptic’s intuition is that machine agency is deeply incompatible with machine-hood in just the way it is not with person-hood. Thus the actions of situated robots like the Mars rovers cannot be more than a mere extension of the roboticist’s agency inasmuch as the robot’s design tethers it to the roboticist’s intentions. In this essay I analyze an early, albeit important, skeptical marker to plead for moderation on both sides.

"The Problem of Original Agency" (pdf preprint)
In The Southwest Philosophy Review, Vol. 33 No. 1 (January 2017) pp. 75-82.
The problem of original intentionality (wherein computational states have at most derived intentionality, but intelligence presupposes original intentionality) has been disputed at some length in the philosophical literature by Searle, Dennett, Dretske, Block, and many others.
Largely absent from these discussions is the problem of original agency: Robots and the computational states upon which they depend have at most derived agency. That is, a robot’s agency is wholly inherited from its designer’s original agency. Yet intelligence presupposes original agency at least as much as it does original intentionality. In this talk I set out the problem of original agency, distinguish it from the problem of original intentionality, and argue that the problem of original agency places at least as much of a limit on computational models of cognition, and is thus at least as vexing, as the problem of original intentionality.

"The Agora" (pdf preprint)
In The Journal of the Philosophy of Education, Vol. 47 No. 3 (August 2013) pp. 379-390.
Student Learning Outcomes are increasingly de rigueur in U.S. higher education. Usually defined as statements of what students will be able to measurably demonstrate upon completing a course or program, Student Learning Outcomes are, their proponents argue, essential to objective assessment and quality assurance. Critics contend that they are a misguided attempt to apply corporate quality-enhancement schemes to higher education. It is not clear whether faculty should embrace or reject Student Learning Outcomes. With sincere apologies to Plato, this dialogue explores arguments for and against their use.

"A Heinous Act" (pdf preprint)
In Philosophical Papers, Vol. 38 No. 3 (November 2009) pp. 381-399. See http://www.informaworld.com/rppa
Intuitively, rape is seriously morally wrong in a way simple assault is not. Yet philosophical disputes about the features of rape that make it the heinous act it is invite a general account of the difference between (mere) wrong-making characteristics and heinous-making characteristics. In this paper I propose just such an account and use it to refute some accounts of the wrongness of rape and refine others.
Given these analyses, I close by developing and defending an account of a particularly important heinous-making characteristic of rape.

"NA-CAP at IU-2009: Networks and Their Philosophical Implications, June 14-16" (pdf)
In The Reasoner, vol. 3, no. 7, July 2009.
A brief report on the 2009 North American Computing and Philosophy conference at Indiana University Bloomington on networks and their philosophical implications.

"Autonomous Robots" (pdf)
In The APA Newsletter on Philosophy and Computers, Spring 2009, Vol. 8, No. 2.
“Autonomy” enjoys much wider application in robotics than in philosophy. Roboticists dub virtually any robot not directly controlled by a human agent “autonomous” regardless of the extent of its behavioral repertoire or the complexity of its control mechanisms. Yet it can be argued that there is an important difference between autonomy as not-directly-controlled and autonomy as self-control. With all due respect to the enormous achievement the Mars rovers Spirit and Opportunity represent, in this paper I argue that the roboticists' conception of autonomy is over-broad in the sense that it is too easily achieved, uninteresting, and ultimately not especially useful. I then propose a theory of autonomous agency that capitalizes on the roboticists' insights while capturing the philosophical conception of autonomy as self-rule. I close by describing some of the capacities that autonomous agency so construed presupposes, explaining why those capacities make it a serious challenge to achieve, and arguing that it is nonetheless an important goal.

"NA-CAP at IU-2008: The Limits of Computation, July 10-12" (pdf)
In The Reasoner, vol. 2, no. 8, pp. 7-8, August 2008.
A brief report on the 2008 North American Computing and Philosophy conference at Indiana University Bloomington on the limits of computation.

"On Two Solutions to Akrasia" (pdf)
In Philosophical Writings, no. 33, pp. 34-52, Autumn 2006 (appearing Spring 2008).
In ancient and contemporary discussions of weakness of will, or akrasia, Aristotle and Davidson have articulated two of the more seminal accounts. Yet drawing a sharp distinction between the conditions on akratic agency, the reasons why it poses a problem, and the solutions in the accounts of Aristotle and Davidson makes clear that Davidson's rejection of Aristotle's solution is illicit insofar as his own solution is, at root, Aristotelian.

"A Puzzle about Akrasia" (pdf)
In Teorema, vol. 26, no. 3, pp. 59-71, Fall 2007.
Attempts to articulate necessary and sufficient conditions on akratic agency reveal a curious technical difficulty which suggests a novel solution to the problem of akrasia: The akrates rationalizes her akratic intention against her better judgment by exploiting a persistent gap in the identification of her own beliefs. Here I motivate and defend this analysis of akrasia and discuss some of the intriguing questions it raises about the nature of belief self-attribution.

"Ethics Online: A Plea for Open Source" (pdf)
In Humanities and Technology Review, vol. 26, pp. 49-64, Fall 2007.
Should the Humanities embrace Open Source software? In this paper I explain the concept of Open Source software and argue for its use in the Humanities by presenting a case study of improved functionality in the code used to implement asynchronous and anonymous online moral discussions.

"A Fallacy in Potentiality" (pdf)
In Dialogue, vol. 46, no. 1, pp. 137-150, Winter 2007.
A popular response to proponents of embryonic stem cell research and advocates of abortion rights alike (summarized by claims like “you came from an embryo!” or “you were a fetus once!”) enjoys a rich philosophical pedigree in the arguments of Hare, Marquis, and others. According to such arguments from potentiality, the prenatal human organism is morally valuable because every person's biological history depends on having completed embryonic and fetal stages. In this paper I set out the steps of the underlying argument in light of how it has been cast in the philosophical literature and uncover an intriguingly illicit inference.

"Introduction to Logic"
With Keith Coleman, Lawrence, Kansas: The University of Kansas Press, 1991. 135p.
A study guide to accompany Copi and Cohen's Introduction to Logic, written under contract with the University of Kansas Division of Continuing Education and the University of Kansas Press.

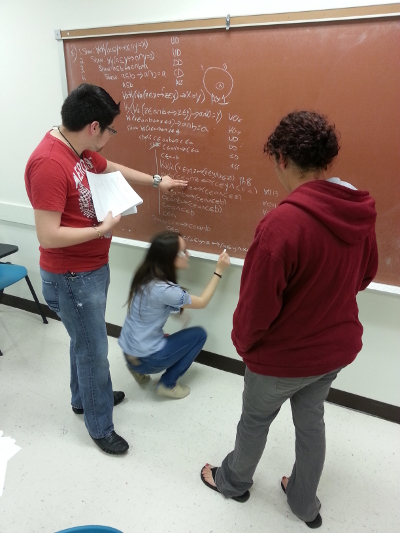

Lecture photos courtesy Jeffrey Janko, University Photographer.
